How to Create Kubernetes Clusters on AWS?

Learn, step by step, how to create and set up Kubernetes, the open-source container orchestration system originally developed by Google, on AWS.

Kubernetes is a powerful and widely used container orchestration platform that allows developers to manage and scale their applications. Amazon Web Services (AWS) is one of the most popular cloud providers in the world, offering a wide range of services, including Kubernetes cluster management. Tools commonly found in a Kubernetes environment include Grafana, Prometheus, and Istio. In this article, we will show you how to create and set up a Kubernetes cluster on AWS, step by step.

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a flexible and scalable infrastructure that allows developers to deploy and manage applications in a highly efficient manner.

Kubernetes uses a master node to control the cluster and worker nodes to run the applications. The master node is responsible for managing the state of the cluster, scheduling applications, and scaling the resources. The worker nodes are responsible for running the applications and providing the resources required by the applications.

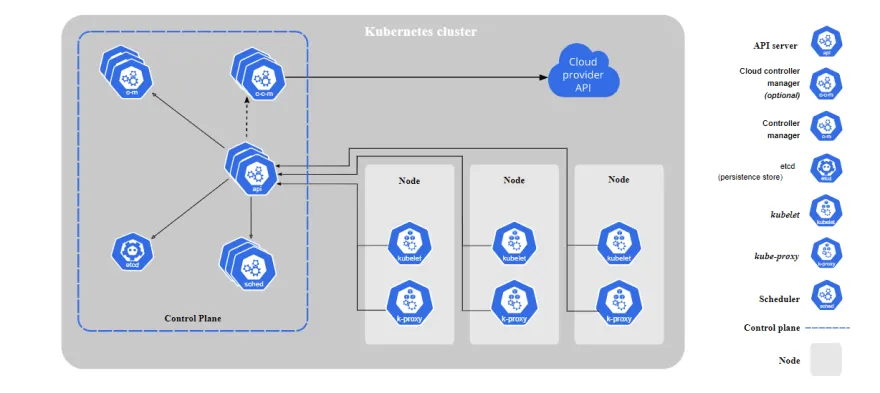

Kubernetes Architecture

A Kubernetes cluster consists of the control plane (the master), the worker nodes, and a distributed key-value store (etcd) that holds the cluster's data.

These are common terms found in Kubernetes architecture.

- Node: A node is a worker machine in Kubernetes. There are master nodes and worker nodes. Master nodes are in charge of managing clusters, while worker nodes host the pods, which host the set of running containers in a cluster. A node is made up of a kubelet, kube proxy, and container runtime.

- Cluster: A cluster is made up of nodes that run and manage containerized applications. Every cluster has at least one worker node. When Kubernetes is deployed, a cluster is formed.

- Pod: A pod is a set of running containers in a cluster. It is the smallest deployable unit in Kubernetes.
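To make the pod definition above concrete, here is a minimal sketch of a Pod manifest, written to a file from the shell. The pod name hello-pod, the container name web, the nginx image, and the file name are illustrative assumptions, not something this article prescribes.

```shell
#!/bin/bash
# Write a minimal Pod manifest to a temporary directory.
# hello-pod, web, and the nginx image tag are illustrative assumptions.
workdir="$(mktemp -d)"
manifest="${workdir}/pod.yaml"

cat > "${manifest}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hello-pod
spec:
  containers:
    - name: web
      image: nginx:1.25
      ports:
        - containerPort: 80
EOF

echo "Wrote ${manifest}"
# Once a cluster is running, the pod could be created with this command:
echo "kubectl apply -f ${manifest}"
```

The script only writes the file and prints the command, so it can be run before any cluster exists.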

Components of Kubernetes Architecture

The following are components that make up complete and healthy Kubernetes clusters.

Master Node

The master node consists of the following sub-components.

- Kubectl: kubectl is the command-line tool used to control a Kubernetes cluster. It talks to the cluster through the API server; strictly speaking, it is a client that runs wherever the user works rather than a part of the master node itself.

- Kube API server: The API server is the front end of the control plane and exposes the Kubernetes API. It is designed to scale horizontally, so several instances can be deployed with traffic balanced between them.

- Etcd: etcd is a consistent, highly available key-value store that holds all cluster data and Kubernetes objects. Good practice is to back it up periodically and to encrypt the snapshot files. Backups can be taken with etcd's built-in snapshot feature or with a volume snapshot.

- Kube scheduler: The kube scheduler takes newly created pods and assigns nodes to them. For scheduling to take place, data locality, deadlines, and software and hardware policies are considered, amongst other factors.

- Kube controller manager: A controller is a control loop that watches the shared state of the cluster through the API server and attempts to move the current state toward the desired state. The controller manager runs these controller processes. Logically, each controller is a separate process, but they are compiled into a single binary and run as a single process. Controllers include the node controller, ServiceAccount controller, job controller, and EndpointSlice controller.
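The control loop idea behind a controller can be illustrated with a toy example. This is not Kubernetes code, only a sketch of the pattern: observe the current state, compare it with the desired state, and take one step toward it. The variable names and the replica counts are assumptions made for illustration.

```shell
#!/bin/bash
# Toy reconcile loop: illustrates the control-loop pattern, not real controller code.
desired=3   # desired number of replicas (hypothetical)
current=0   # observed number of replicas (hypothetical)
steps=0

while [ "${current}" -ne "${desired}" ]; do
  if [ "${current}" -lt "${desired}" ]; then
    current=$((current + 1))   # too few: start one more
  else
    current=$((current - 1))   # too many: stop one
  fi
  steps=$((steps + 1))
  echo "observed=${current} desired=${desired}"
done

echo "Reconciled after ${steps} steps"
```

A real controller never exits: it keeps watching the API server and repeats the comparison whenever the observed state drifts from the desired state.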

Worker Nodes

The worker nodes are composed of the following sub-components.

- Kubelet: A kubelet is an agent that runs on each node in a cluster.

- Pod: A pod is a group of one or more containers that are scheduled together on a node and share networking and storage.

- Docker: Docker is the container runtime used in this article. It runs the containers that make up a pod from container images. Kubernetes can also work with other runtimes, such as containerd and CRI-O.

- Kube proxy: It is a network proxy that runs on the worker nodes in a cluster.

Benefits of Kubernetes Architecture

The following are the benefits of Kubernetes' architecture.

- Scalability: Kubernetes architecture is structured to accommodate scalability. This means that containers can be created and used according to the demand for resources.

- Security: Security is prioritized on Kubernetes by isolating containerized applications and encrypting sensitive files such as Kubernetes states and snapshot files. There is also encrypted communication among its components.

- Automation: Routine tasks such as deployment, scaling, and restarting failed containers are automated. This increases the effectiveness and efficiency of working teams.

- Flexibility and resilience: The architecture permits custom configuration according to the specific needs of a user, with the integration of available tools and processes. It is also resilient and can withstand high traffic.

- Resource management: Resource usage is optimized as containers are automatically scheduled on available nodes in a cluster.

Kubernetes on Cloud

AWS

Why use AWS for Kubernetes?

AWS is a popular cloud provider that offers a wide range of services, including Kubernetes cluster management. AWS provides a highly available, secure, and scalable infrastructure for running Kubernetes clusters. With AWS, you can focus on developing and deploying your applications without worrying about the underlying infrastructure.

Set up Kubernetes on AWS

To set up Kubernetes on Amazon Web Services (AWS), you first need an AWS account.

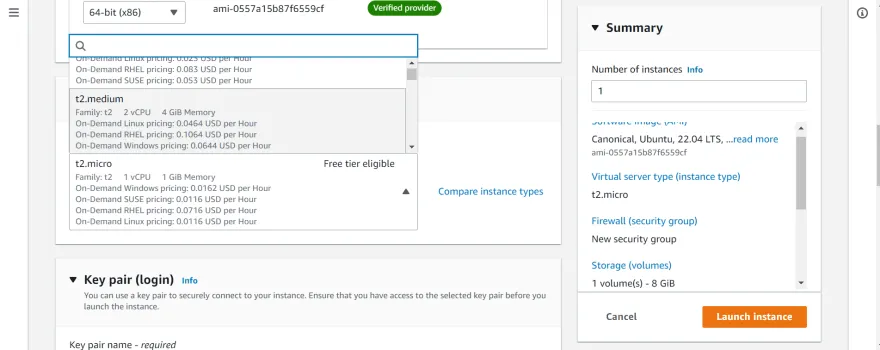

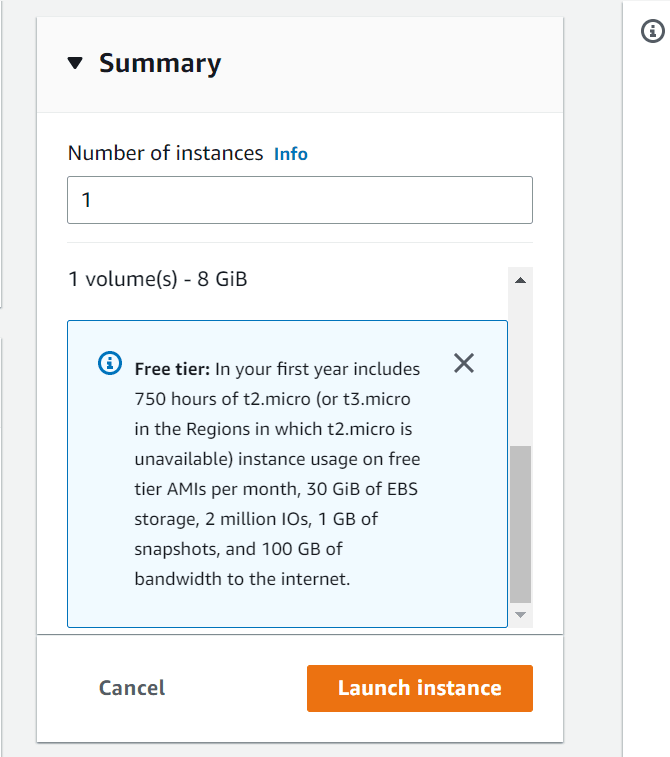

1. The first step is to create an EC2 instance.

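Give the instance a name of your choice, select an Ubuntu image (the scripts later in this article use apt and the Ubuntu focal Docker repository), and change its instance type to t2.medium.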

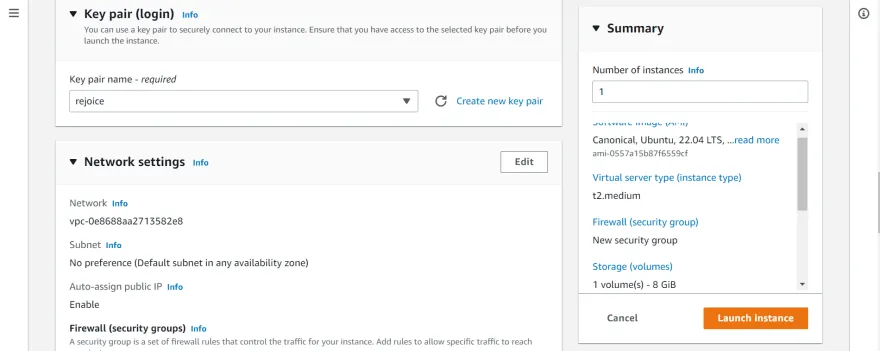

2. Choose a key pair, or create a new key pair.

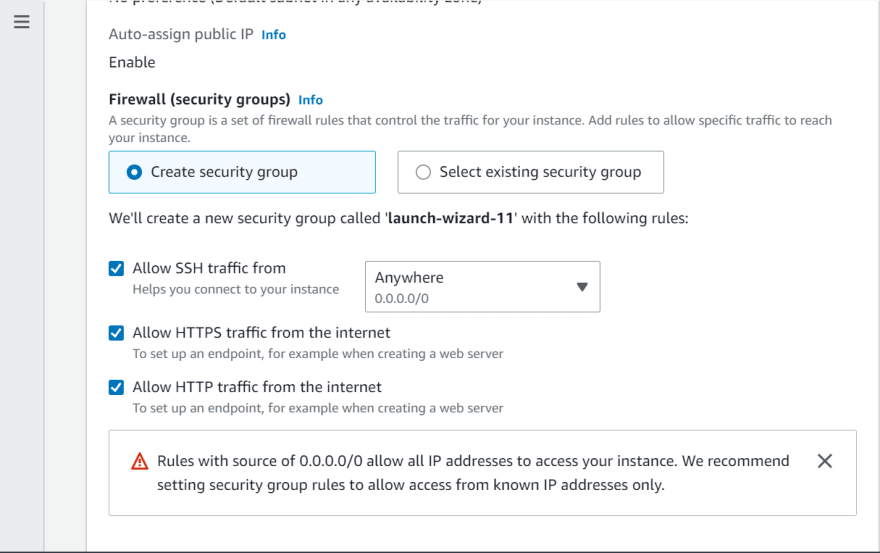

3. Allow HTTP & HTTPS Traffic

For network settings, leave them as default, but allow HTTP and HTTPS traffic from the internet. Leave the Configure Storage section as it is.

4. Proceed to Launch the instance.

5. The instance has been successfully created.

Wait a few minutes for the instance to be ready. Then view the instance you created and click the checkbox on the left to select it.

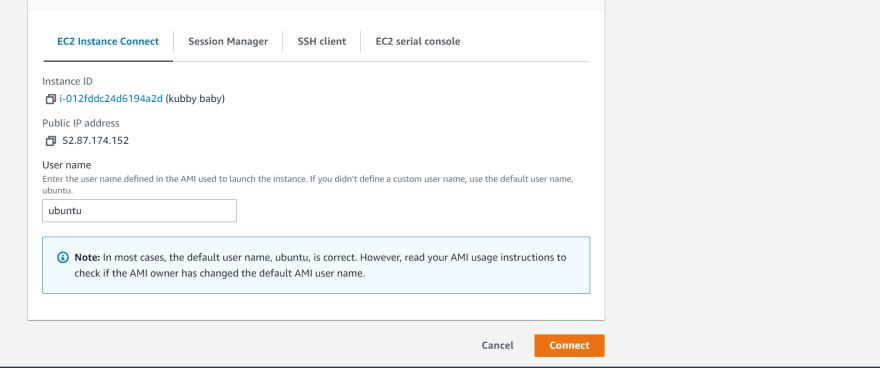

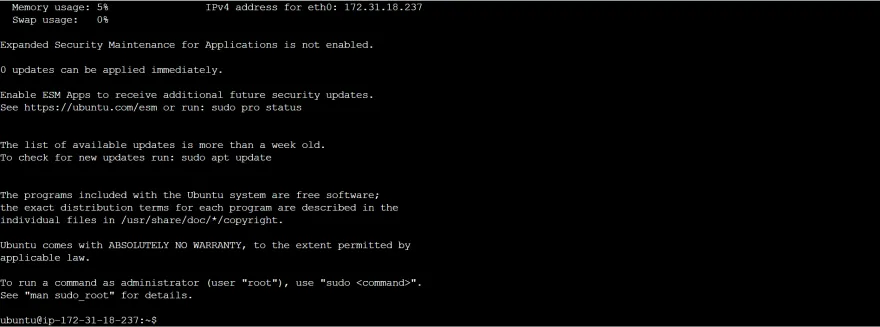

6. Connect to Instance Terminal via SSH

There are four options for connecting to an instance. You can connect to the instance via the AWS in-browser SSH client or another SSH client.
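If you prefer your own SSH client over the in-browser one, the command typically has the shape sketched below. The key file name and host name are hypothetical placeholders, and the ubuntu login user assumes the instance was launched from an Ubuntu image. The script only assembles and prints the command.

```shell
#!/bin/bash
# Hypothetical values: replace with your own key file and the instance's public DNS name.
key_file="my-key.pem"
host="ec2-203-0-113-10.compute-1.amazonaws.com"
# "ubuntu" is the default login user on Ubuntu images (an assumption about your AMI).
user="ubuntu"

# ssh refuses private keys that other users can read, so restrict the file first:
#   chmod 400 "${key_file}"
ssh_cmd="ssh -i ${key_file} ${user}@${host}"
echo "${ssh_cmd}"
```

Copy the printed command into a terminal on your own machine, in the directory that holds the key file.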

7. It automatically opens a command line interface.

Clear the introductory messages to have a clear workspace.

This article makes use of commands embedded in custom shell scripts that enable the installation of required tools, such as Docker, minikube, and kubectl, and their dependencies. This is a faster method, as it saves time and resources.

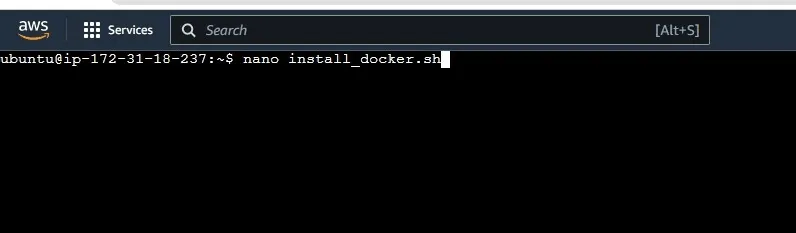

8. Create a shell script using nano.

nano install_docker.sh

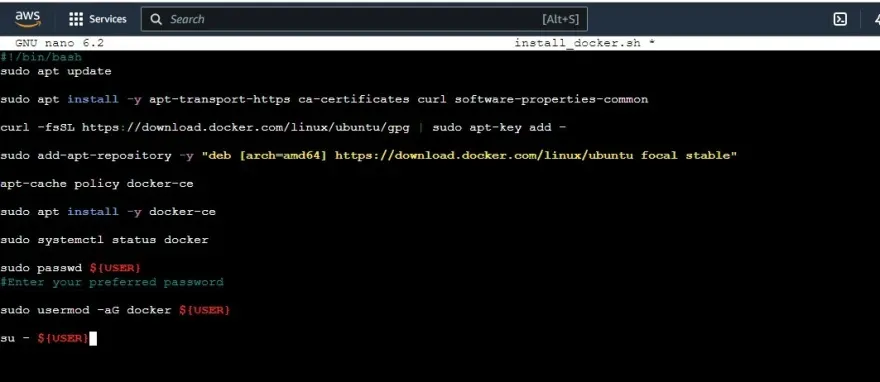

#!/bin/bash

sudo apt update

sudo apt install -y apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository -y "deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable"

apt-cache policy docker-ce

sudo apt install -y docker-ce

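#--no-pager prints the status directly instead of opening a pager that could pause the script
sudo systemctl status docker --no-pager

#Set a password for the current user; enter your preferred password when prompted
sudo passwd "${USER}"

#Add the current user to the docker group so that docker can be run without sudo
sudo usermod -aG docker "${USER}"

#Start a new login shell so that the group change takes effect
su - "${USER}"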

9. Add the commands above to the file and save the changes.

Then run chmod +x install_docker.sh to make the file executable.

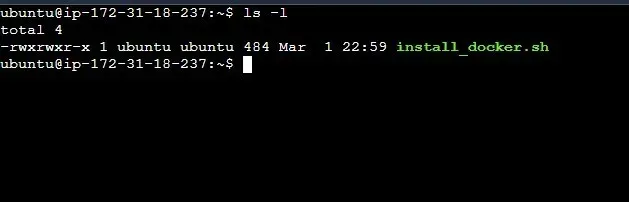

10. Use ls -l to check the permissions of the file.

When a file is green, as shown above, it means that it is executable.

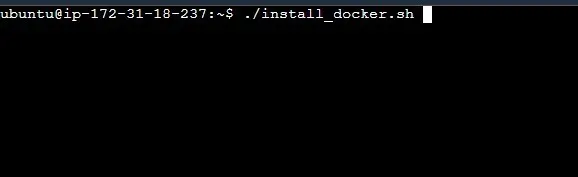

11. Run ./install_docker.sh to install Docker and its dependencies.

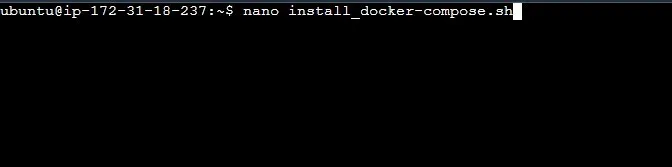

12. Run nano install_docker-compose.sh and add the following commands to install docker-compose.

#!/bin/bash

groups

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

docker-compose --version

13. Save the script, and then run chmod +x install_docker-compose.sh

This makes the file executable.

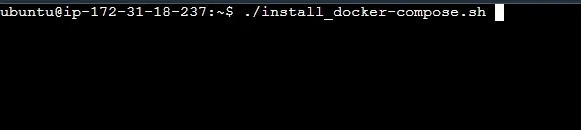

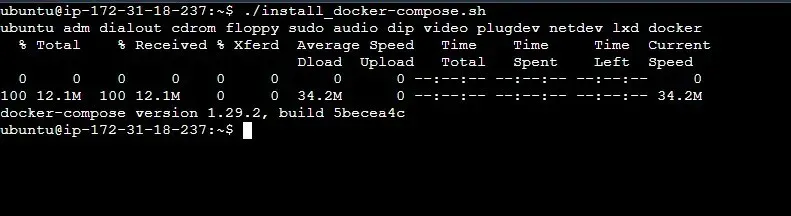

14. Run ./install_docker-compose.sh to download and install docker-compose.

15. This is the output.

After we have installed Docker, docker-compose and their dependencies, the next step is to install minikube.

Minikube runs a single-node Kubernetes cluster on one machine, which in this article is the EC2 instance. It also manages the cluster's components, ensuring they are integrated and functioning properly.

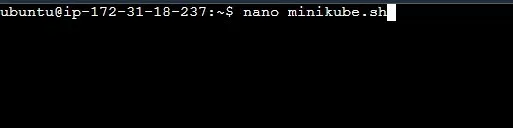

16. To install minikube, run nano minikube.sh to create the script.

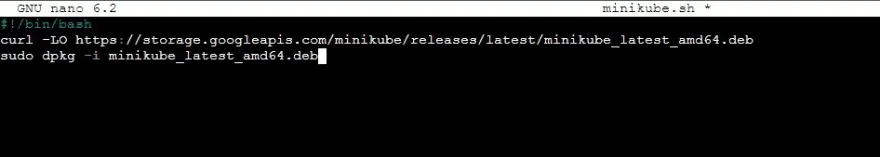

#!/bin/bash

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube_latest_amd64.deb

sudo dpkg -i minikube_latest_amd64.deb

17. Store the commands above in the script.

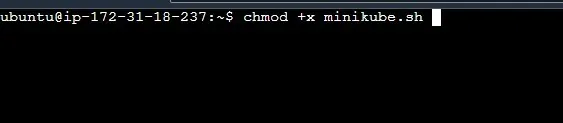

18. Save the file and run chmod +x minikube.sh to make the file executable.

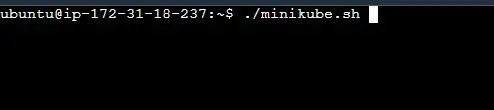

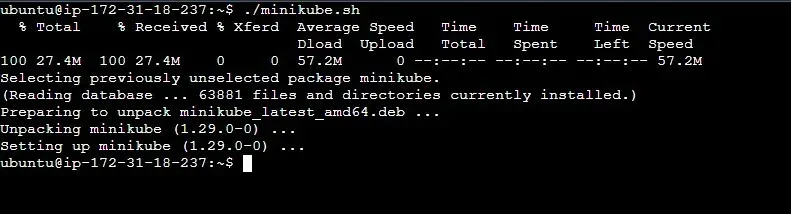

19. Run ./minikube.sh to download and install minikube.

20. This is the output.

After minikube has been installed, the next step is to install kubectl.

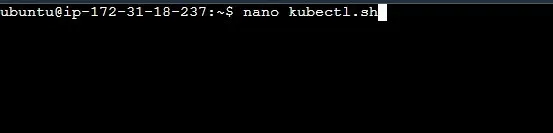

21. To install kubectl, run nano kubectl.sh

#!/bin/bash

sudo snap install kubectl --classic

22. Store the command above in the shell script.

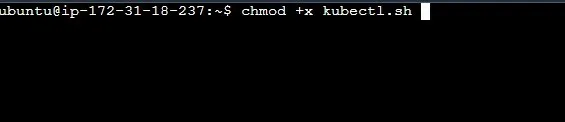

23. Run chmod +x kubectl.sh to make the file executable.

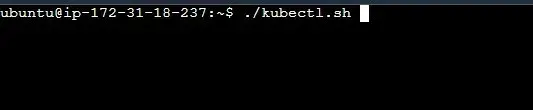

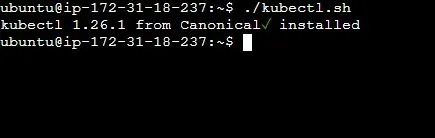

24. Run ./kubectl.sh to install kubectl.

25. This is the output.

Now that you are done with the installation process, go ahead to start minikube.
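Before starting minikube, it can be worth confirming that all four tools are actually on the PATH. The following is a small sketch of such a check; the function name check_tool is an assumption made for this example.

```shell
#!/bin/bash
# Report whether a command is available on the PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: installed"
  else
    echo "$1: missing"
  fi
}

# The tools installed by the scripts in this article.
for tool in docker docker-compose minikube kubectl; do
  check_tool "${tool}"
done
```

If any tool is reported as missing, rerun the corresponding install script before continuing.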

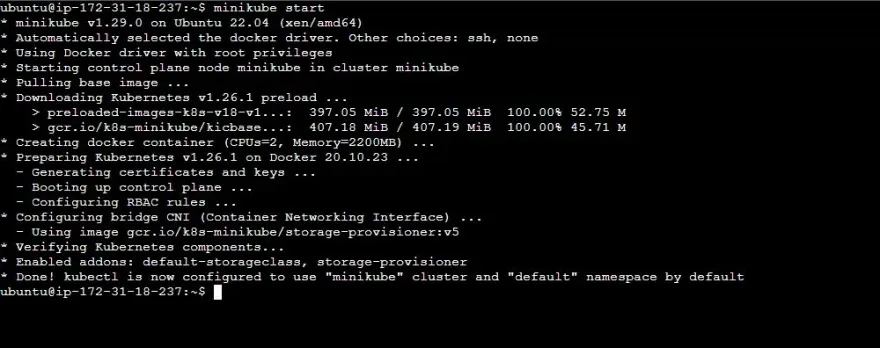

26. Run minikube start

27. This creates a single-node cluster, as seen below.

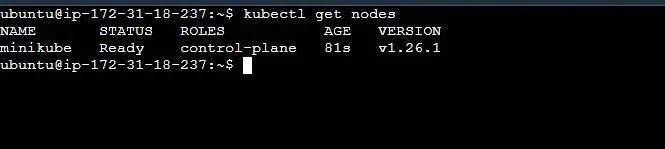

kubectl get nodes is a kubectl command that lists the nodes in a cluster. As you can see, the cluster has a single node, which serves as the control plane and also runs the workloads.

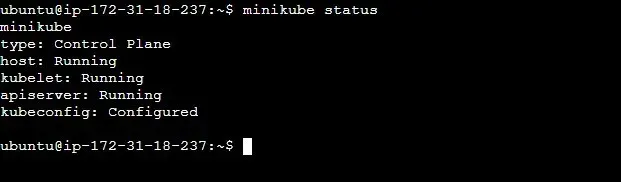

28. To see the status of your cluster, run minikube status.

Now, you have successfully created a Kubernetes cluster on AWS using an EC2 instance. You can go ahead and containerize and deploy applications.
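As a starting point for deploying an application, the sketch below writes a small Deployment manifest and applies it only if kubectl is installed and can reach a cluster. The name hello-web, the nginx image, and the replica count are illustrative assumptions.

```shell
#!/bin/bash
# Write a small Deployment manifest.
# hello-web, the nginx image, and the replica count are illustrative assumptions.
workdir="$(mktemp -d)"
manifest="${workdir}/deployment.yaml"

cat > "${manifest}" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-web
  template:
    metadata:
      labels:
        app: hello-web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
EOF

# Apply the manifest only if kubectl is installed and can reach a cluster;
# otherwise just print the command to run later.
if command -v kubectl >/dev/null 2>&1 && kubectl cluster-info >/dev/null 2>&1; then
  kubectl apply -f "${manifest}"
  kubectl get pods -l app=hello-web
else
  echo "kubectl apply -f ${manifest}"
fi
```

On the minikube cluster created above, the two pods should be listed by kubectl get pods once the image has been pulled.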

Kubernetes on AWS

Use Cases, Benefits, Drawbacks

Kubernetes on AWS Use Cases

- Kubernetes is used in DevOps workflows to automate the software delivery process.

- It provides readily available clusters that can be scaled up or down according to the user’s needs.

- It is used to manage applications in hybrid cloud environments, ensuring consistency.

- It encourages continuous delivery by providing a favorable environment for automated testing, building, and deployment.

Benefits of Kubernetes on AWS

- Kubernetes on AWS is cost-effective, allowing organizations to automate the development of their products according to their budget.

- It reduces the manual effort that is associated with traditional software development models.

- Kubernetes on AWS minimizes downtime.

- It scales with demand, which also helps keep costs under control.

Drawbacks of Kubernetes on AWS

- Security challenges may arise from misconfigurations or vulnerabilities in container images and their underlying infrastructure.

- Organizations that use Kubernetes on AWS may find it difficult to migrate to other cloud platforms, which leads to vendor lock-in. This is because AWS is a proprietary platform.

- Kubernetes is a complex tool and cannot be used properly without the right skill set.

- Hiring skilled professionals to manage these tools can be quite expensive.

Cost Optimization

Cost optimization is essential when running Kubernetes on cloud platforms. Due to excessive resource usage and inefficient application deployment, Kubernetes can incur unwanted costs despite its benefits as a container orchestration tool.

Cost optimization can be achieved by right-sizing clusters, autoscaling, and allocating resources properly. Keeping images in a container registry avoids storing duplicate copies of them; serverless computing helps with scalability; and spot instances let clusters run at reduced cost.
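Right-sizing starts with simple arithmetic: how many pods fit on a node given their resource requests. The sketch below works this out for the t2.medium instance used in this article (2 vCPUs and 4 GiB of memory). The per-pod requests are hypothetical, and the calculation ignores the capacity that the operating system and Kubernetes components reserve, so the result is an upper bound.

```shell
#!/bin/bash
# Capacity of a t2.medium instance: 2 vCPUs and 4 GiB of memory.
node_cpu_millicores=2000
node_memory_mib=4096

# Hypothetical per-pod resource requests.
pod_cpu_millicores=250
pod_memory_mib=768

# Integer division gives how many pods fit by each resource.
fit_by_cpu=$((node_cpu_millicores / pod_cpu_millicores))
fit_by_memory=$((node_memory_mib / pod_memory_mib))

# The scarcer resource sets the limit.
if [ "${fit_by_cpu}" -lt "${fit_by_memory}" ]; then
  max_pods="${fit_by_cpu}"
else
  max_pods="${fit_by_memory}"
fi

echo "Pods that fit by CPU: ${fit_by_cpu}"
echo "Pods that fit by memory: ${fit_by_memory}"
echo "Upper bound on pods per node: ${max_pods}"
```

Here memory is the limiting resource, so lowering the memory request or choosing a memory-heavier instance type would be the first thing to look at.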

Security

Security is a necessary part of containerizing and deploying applications. It ensures the safety of applications, infrastructure, and data. Keeping your Kubernetes architecture secure is important because it is a complex system with many independent, moving parts, and because it is often run on public cloud platforms with multiple users accessing it.

Security in a Kubernetes architecture can be implemented with firewalls, network segmentation, and container image scanning tools. These help identify unusual activity and block security breaches. Using secure communication protocols like HTTPS enforces encryption and helps prevent data interception.
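Network segmentation inside a cluster is usually expressed with NetworkPolicy objects. As a sketch, the script below writes a policy that denies all incoming traffic to the pods in a namespace, which is a common starting point before specific traffic is allowed. The policy name and file name are assumptions, and the policy is only enforced if the cluster's network plugin supports NetworkPolicy.

```shell
#!/bin/bash
# Write a default-deny ingress NetworkPolicy.
# The policy name and file name are illustrative assumptions.
workdir="$(mktemp -d)"
policy="${workdir}/default-deny-ingress.yaml"

cat > "${policy}" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF

echo "Wrote ${policy}"
# With a running cluster, the policy could be applied with this command:
echo "kubectl apply -f ${policy}"
```

The empty podSelector selects every pod in the namespace where the policy is applied.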

Scalability

Scalability is what allows applications to be deployed efficiently across many hosts. It can be achieved by designing applications that scale easily and by using Kubernetes operators. Other scalability measures include horizontal scaling (adding more nodes or pod replicas to share the load) and autoscaling, the automatic adjustment of the number of pods or nodes in a cluster according to demand.
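Autoscaling can be made concrete with the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the current metric to the target metric, rounded up. The sketch below applies that formula with hypothetical numbers and leaves out details such as the tolerance band and stabilization settings.

```shell
#!/bin/bash
# Hypothetical inputs: 3 replicas averaging 90% CPU against a 60% target.
current_replicas=3
current_cpu_percent=90
target_cpu_percent=60

# desired = ceil(current_replicas * current_metric / target_metric)
# Integer ceiling division: (a + b - 1) / b
product=$((current_replicas * current_cpu_percent))
desired_replicas=$(( (product + target_cpu_percent - 1) / target_cpu_percent ))

echo "Desired replicas: ${desired_replicas}"
```

With these inputs the exact ratio is 4.5, so the autoscaler would round up to five replicas.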

Conclusion

Kubernetes is a powerful tool with a distinctive architecture that can be used to automate the deployment and management of containerized applications. Organizations that adopt it can see a marked improvement in their application development projects. Although there are drawbacks, its benefits greatly outweigh them. To learn more about Kubernetes on AWS, check out the official documentation.

FAQs

What is Kubernetes, and why is it used?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It is used to simplify the deployment of applications and reduce operational overhead.

What are the benefits of using Kubernetes on AWS?

Using Kubernetes on AWS provides benefits such as easy scaling, cost-effectiveness, and high availability.

How do I choose the instance type for my Kubernetes nodes?

You should choose an instance type based on the requirements of your applications, such as CPU, memory, and I/O performance.

Can I use other cloud providers with Kubernetes?

Kubernetes is a cloud-agnostic platform that can be used with other cloud providers such as Google Cloud, Microsoft Azure, and Alibaba Cloud.

What is the difference between Amazon EKS and self-managed Kubernetes?

Amazon EKS is a managed Kubernetes service in which AWS runs and maintains the Kubernetes control plane for you. Self-managed Kubernetes requires manual setup, configuration, and management of the whole Kubernetes cluster.